- run it straight with `gguf-connector`: pick a `gguf` file in the current directory to interact with by running:

`ggc w2`

GGUF file(s) available. Select which one to use:

1. wan2.1-t2v-1.3b-q4_0.gguf
2. wan2.1-t2v-1.3b-q8_0.gguf
3. wan2.1-vace-1.3b-q4_0.gguf
4. wan2.1-vace-1.3b-q8_0.gguf

Enter your choice (1 to 4): _

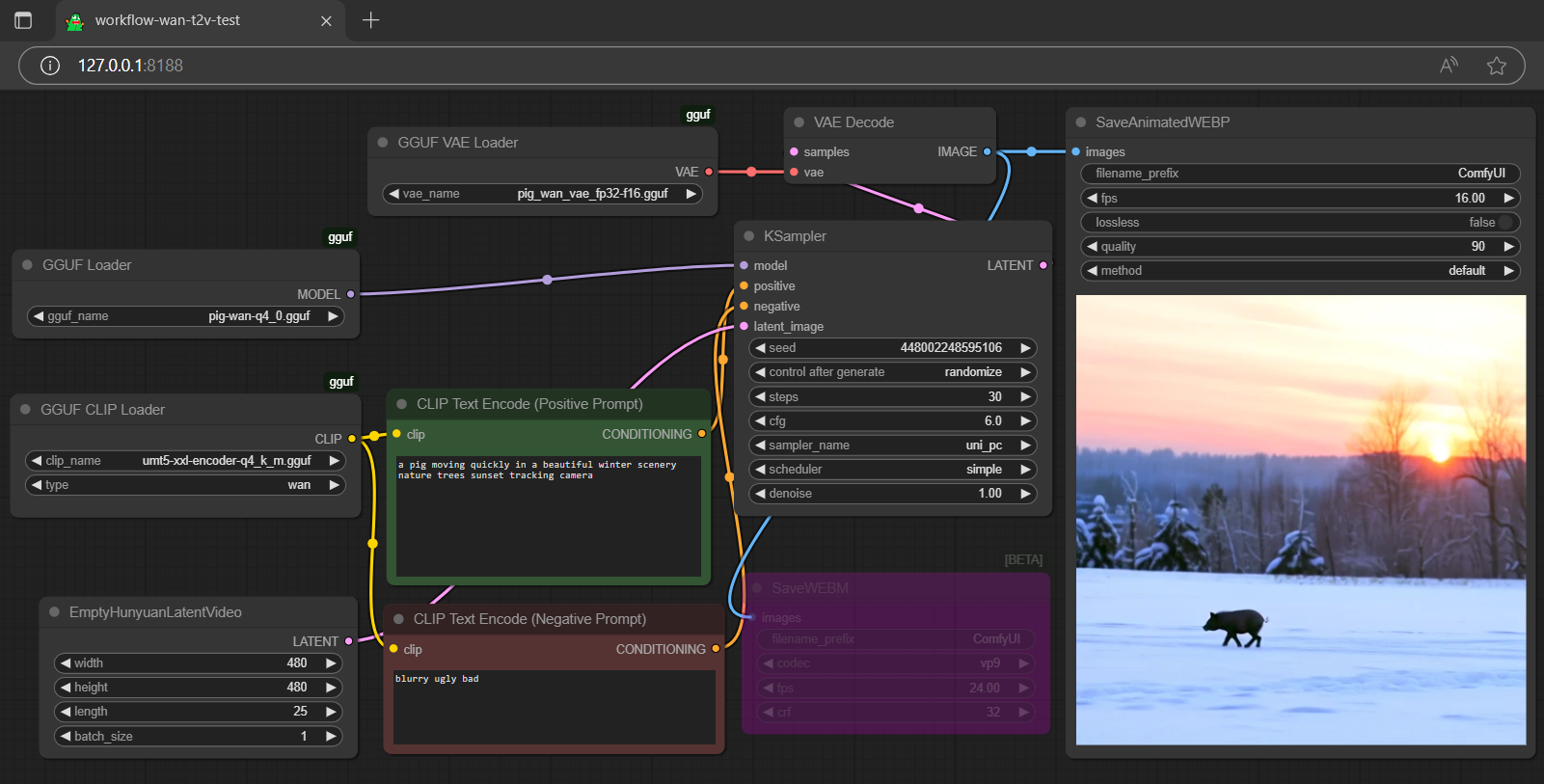
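The `ggc w2` menu boils down to listing the `.gguf` files in the current directory and reading back a number. The sketch below only illustrates that flow in Python; it is not gguf-connector's actual code, and the names `list_gguf_files`, `parse_choice` and `pick_gguf` are hypothetical.

```python
from pathlib import Path


def list_gguf_files(directory="."):
    """Return the .gguf files in `directory`, sorted by name (hypothetical helper)."""
    return sorted(
        p.name for p in Path(directory).iterdir()
        if p.is_file() and p.suffix == ".gguf"
    )


def parse_choice(raw, count):
    """Turn a 1-based menu answer into a 0-based index, or None if it is invalid."""
    try:
        n = int(raw.strip())
    except ValueError:
        return None
    return n - 1 if 1 <= n <= count else None


def pick_gguf(directory="."):
    """Print a numbered menu of gguf files and return the chosen filename."""
    files = list_gguf_files(directory)
    if not files:
        print("No GGUF files found in the current directory.")
        return None
    print("GGUF file(s) available. Select which one to use:")
    for i, name in enumerate(files, start=1):
        print(f"{i}. {name}")
    while True:
        index = parse_choice(input(f"Enter your choice (1 to {len(files)}): "), len(files))
        if index is not None:
            return files[index]
        print("Invalid choice, try again.")
```

Calling `pick_gguf()` from a folder holding the wan files returns the chosen filename; an invalid answer simply re-prompts instead of exiting.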

- drag the wan model to `./ComfyUI/models/diffusion_models`
- drag the umt5 encoder to `./ComfyUI/models/text_encoders`
- drag the pig vae to `./ComfyUI/models/vae`
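If you prefer a script over dragging, the three moves above can be done in one pass. This is a minimal sketch that assumes the files keep their usual prefixes (`wan...`, `umt5...`, `pig...`) and that ComfyUI lives at `./ComfyUI`; `DESTINATIONS`, `destination_for` and `place_gguf_files` are hypothetical names, not part of gguf-connector or gguf-node.

```python
import shutil
from pathlib import Path

# Hypothetical prefix -> ComfyUI subfolder mapping, assumed from the drag steps.
DESTINATIONS = {
    "wan": "diffusion_models",
    "umt5": "text_encoders",
    "pig": "vae",
}


def destination_for(filename, comfy_root="./ComfyUI"):
    """Return the target folder for a gguf file, or None if no prefix matches."""
    name = Path(filename).name.lower()
    for prefix, subfolder in DESTINATIONS.items():
        if name.startswith(prefix):
            return Path(comfy_root) / "models" / subfolder
    return None


def place_gguf_files(source_dir=".", comfy_root="./ComfyUI", copy=False):
    """Move (or copy) every matching .gguf file into its ComfyUI model folder."""
    placed = []
    for path in sorted(Path(source_dir).glob("*.gguf")):
        target_dir = destination_for(path.name, comfy_root)
        if target_dir is None:
            continue  # not a wan/umt5/pig file; leave it where it is
        target_dir.mkdir(parents=True, exist_ok=True)
        target = target_dir / path.name
        if copy:
            shutil.copy2(path, target)
        else:
            shutil.move(str(path), str(target))
        placed.append(target)
    return placed
```

For example, `place_gguf_files(copy=True)` copies instead of moving, which keeps the originals in place for `ggc w2`.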

- `wan` architecture; should work with both the comfyui-gguf and gguf nodes
- a full gguf set (model + encoder + vae) works right away; the gguf node is recommended for a full gguf set

- the vace model is recommended: it does not need a vision clip for i2v and v2v, and according to the initial test results it runs much faster than the fun model

- upgrade your node to get umt5 gguf encoder support

- note: with the umt5 gguf encoder, you might hit an oom error on the first prompt, right after the tokenizer is rebuilt (once built, the tokenizer stays alive for the rest of the session, until you kill it); don't panic, just prompt again and it should work
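Since a full gguf set needs all three pieces in place, a quick check before loading a workflow can save a failed run. The helper below is a hypothetical sketch that assumes the `./ComfyUI/models` layout from the drag steps and only looks for at least one `.gguf` file in each of the three folders; it does not check that the files actually belong together.

```python
from pathlib import Path

# Hypothetical: the three folders a full gguf set (model + encoder + vae) fills.
REQUIRED = {
    "model": "diffusion_models",
    "encoder": "text_encoders",
    "vae": "vae",
}


def missing_parts(comfy_root="./ComfyUI"):
    """List the parts of the full gguf set that have no .gguf file yet."""
    models = Path(comfy_root) / "models"
    missing = []
    for part, subfolder in REQUIRED.items():
        folder = models / subfolder
        # A missing folder or a folder without any .gguf file both count as missing.
        if not folder.is_dir() or not any(folder.glob("*.gguf")):
            missing.append(part)
    return missing
```

An empty list from `missing_parts()` means each folder holds at least one gguf file.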