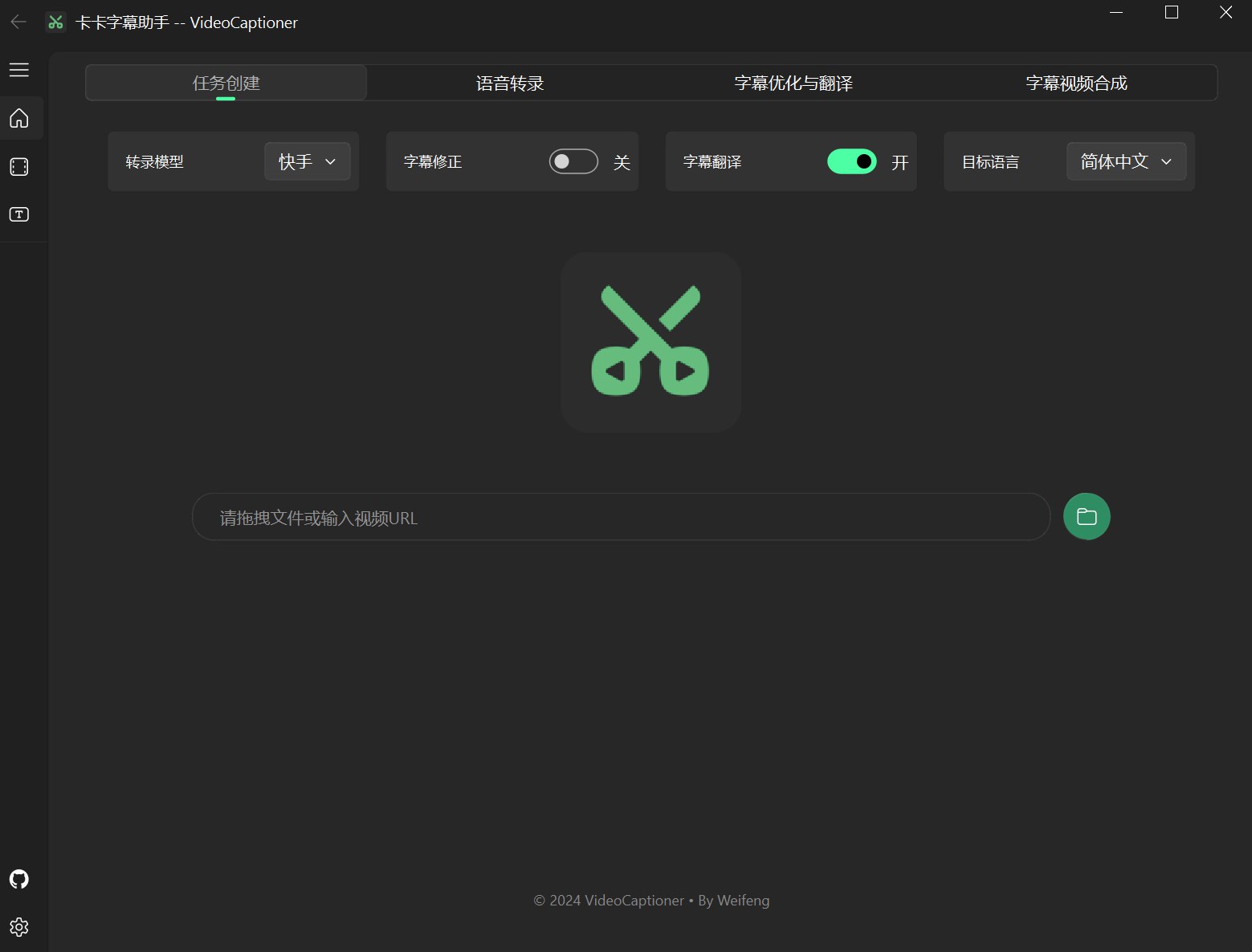

Kaka Subtitle Assistant

An LLM-powered video subtitle processing assistant, supporting speech recognition, subtitle segmentation, optimization, and translation.

Kaka Subtitle Assistant (VideoCaptioner) is easy to operate and doesn't require high-end hardware. It supports both online API calls and local offline processing (with GPU support) for speech recognition. It leverages Large Language Models (LLMs) for intelligent subtitle segmentation, correction, and translation. It offers a one-click solution for the entire video subtitle workflow! Add stunning subtitles to your videos.

The latest version now supports VAD, vocal separation, word-level timestamps, batch subtitle processing, and other practical features.

- 🎯 No GPU required to use powerful speech recognition engines for accurate subtitle generation.

- ✂️ LLM-based intelligent segmentation and sentence breaking for more natural subtitle reading.

- 🔄 AI subtitle multi-threading optimization and translation, adjusting subtitle format and making expressions more idiomatic and professional.

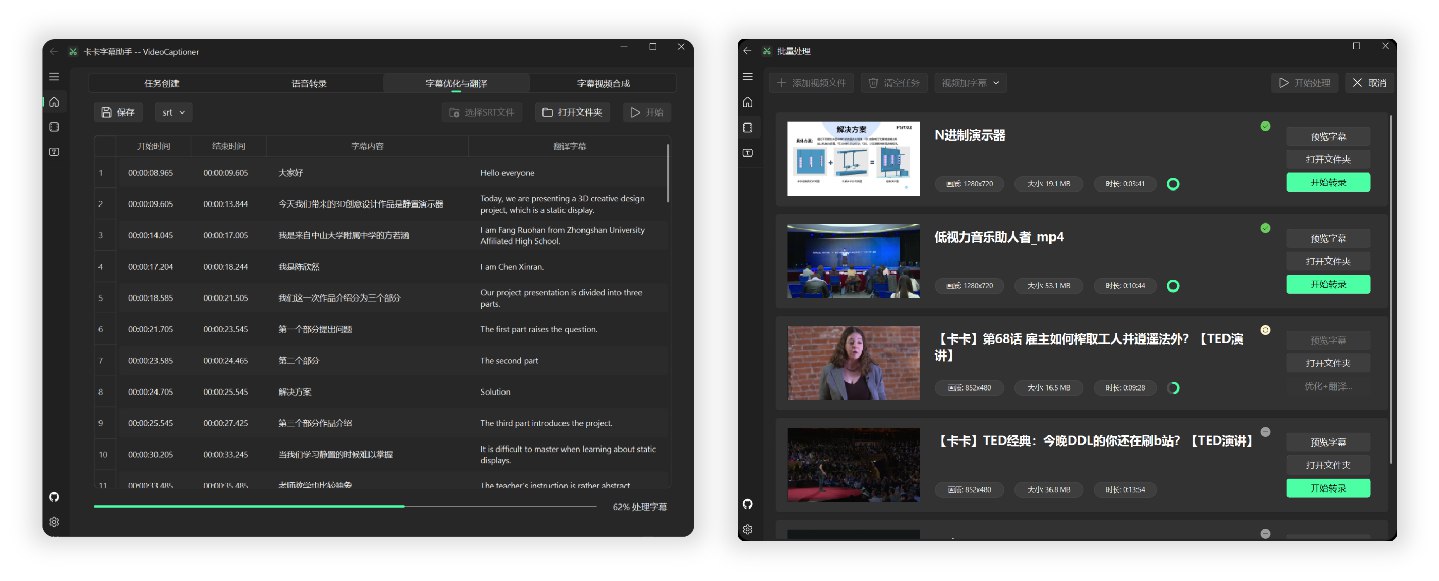

- 🎬 Supports batch video subtitle synthesis, improving processing efficiency.

- 📝 Intuitive subtitle editing and viewing interface, supporting real-time preview and quick editing.

- 🤖 Low model token consumption, and built-in basic LLM model to ensure out-of-the-box use.

Processing a 14-minute 1080P English TED video from Bilibili end-to-end, using the local Whisper model for speech recognition and the gpt-4o-mini model for optimization and translation into Chinese, took approximately 3 minutes.

Based on backend calculations, the cost for model optimization and translation was less than ¥0.01 (calculated using OpenAI's official pricing).

For detailed results of subtitle and video synthesis, please refer to the TED Video Test.

The software is lightweight, with a package size of less than 60MB, and includes all necessary environments. Download and run directly.

-

Download the latest version of the executable from the Release page. Or: Lanzou Cloud Download

-

Open the installer to install.

-

(Optional) LLM API Configuration, choose whether to enable subtitle optimization or subtitle translation.

-

Drag and drop the video file into the software window for fully automatic processing.

Note: Each step supports independent processing and file drag-and-drop.

For MacOS Users

Due to my lack of a Mac, I cannot test and package for MacOS. MacOS executables are temporarily unavailable.

Mac users, please download the source code and install Python dependencies to run. (Local Whisper functionality is currently not supported on MacOS.)

- Install ffmpeg and Aria2 download tools

brew install ffmpeg brew install aria2 brew install python@3.**

- Clone the project

git clone https://github.com/WEIFENG2333/VideoCaptioner.git

cd VideoCaptioner

- Install dependencies

python3.** -m venv venv

source venv/bin/activate

pip install -r requirements.txt

- Run the program

python main.py

Docker Deployment (beta)

The current application is relatively basic. We welcome PR contributions.

git clone https://github.com/WEIFENG2333/VideoCaptioner.git

cd VideoCaptioner

docker build -t video-captioner .

Run with custom API configuration:

docker run -d \

-p 8501:8501 \

-v $(pwd)/temp:/app/temp \

-e OPENAI_BASE_URL="Your API address" \

-e OPENAI_API_KEY="Your API key" \

--name video-captioner \

video-captioner

Open your browser and go to: http://localhost:8501

- The container already includes necessary dependencies like ffmpeg.

- If you need to use other models, please configure them through environment variables.

The software fully utilizes the advantages of Large Language Models (LLMs) in understanding context to further process subtitles generated by speech recognition. It effectively corrects typos, unifies terminology, and makes the subtitle content more accurate and coherent, providing users with an excellent viewing experience!

- Supports mainstream video platforms at home and abroad (Bilibili, YouTube, etc.)

- Automatically extracts and processes the original subtitles of the video.

- Provides multiple online recognition interfaces with effects comparable to Jianying (free, high-speed).

- Supports local Whisper model (privacy protection, offline).

- Automatically optimizes the format of terminology, code snippets, and mathematical formulas.

- Contextual sentence segmentation optimization to improve reading experience.

- Supports manuscript prompts, using original manuscripts or related prompts to optimize subtitle segmentation.

- Context-aware intelligent translation ensures that the translation takes the entire text into account.

- Guides the large model to reflect on the translation through prompts, improving translation quality.

- Uses a sequence fuzzy matching algorithm to ensure complete consistency of the timeline.

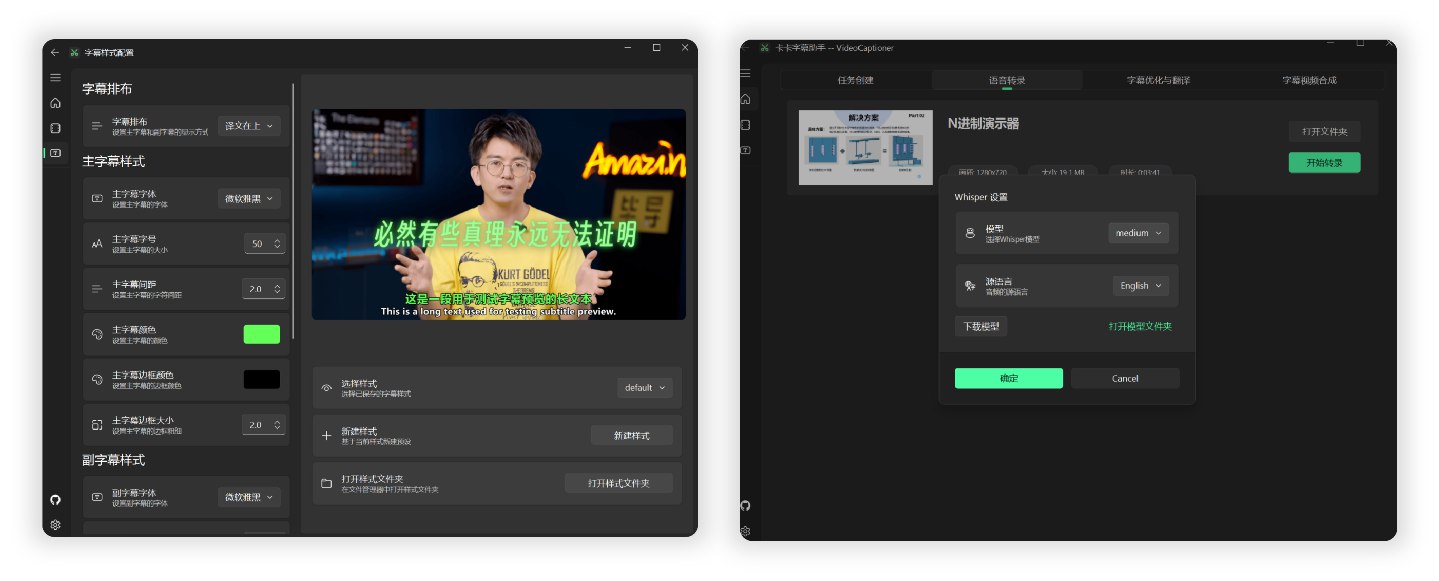

- Rich subtitle style templates (popular science style, news style, anime style, etc.).

- Multiple subtitle video formats (SRT, ASS, VTT, TXT).

| Configuration Item | Description |

|---|---|

| Built-in Model | The software includes a basic large language model (gpt-4o-mini), which can be used without configuration (public service is unstable). |

| API Support | Supports standard OpenAI API format. Compatible with SiliconCloud, DeepSeek, Ollama, etc. For configuration methods, please refer to the Configuration Documentation. |

Recommended models: For higher quality, choose Claude-3.5-sonnet or gpt-4o.

There are two Whisper versions: WhisperCpp and fasterWhisper (recommended). The latter has better performance and both require downloading models within the software.

| Model | Disk Space | RAM Usage | Description |

|---|---|---|---|

| Tiny | 75 MiB | ~273 MB | Transcription is mediocre, for testing only. |

| Small | 466 MiB | ~852 MB | English recognition is already good. |

| Medium | 1.5 GiB | ~2.1 GB | This version is recommended as the minimum for Chinese recognition. |

| Large-v1/v2 👍 | 2.9 GiB | ~3.9 GB | Good performance, recommended if your configuration allows. |

| Large-v3 | 2.9 GiB | ~3.9 GB | Community feedback suggests potential hallucination/subtitle repetition issues. |

Recommended model: Large-v1 is stable and of good quality.

Note: The above models can be downloaded directly within the software using a domestic network; both GPU and integrated graphics are supported.

- On the "Subtitle Optimization and Translation" page, there is a "Manuscript Matching" option, which supports the following one or more types of content to assist in subtitle correction and translation:

| Type | Description | Example |

|---|---|---|

| Glossary | Correction table for terminology, names, and specific words. | 机器学习->Machine Learning 马斯克->Elon Musk 打call -> Cheer on Turing patterns Bus paradox |

| Original Subtitle Text | The original manuscript or related content of the video. | Complete speech scripts, lecture notes, etc. |

| Correction Requirements | Specific correction requirements related to the content. | Unify personal pronouns, standardize terminology, etc. Fill in requirements related to the content, example reference |

- If you need manuscript assistance for subtitle optimization, fill in the manuscript information first, then start the task processing.

- Note: When using small LLM models with limited context, it is recommended to keep the manuscript content within 1000 words. If using a model with a larger context window, you can appropriately increase the manuscript content.

| Interface Name | Supported Languages | Running Mode | Description |

|---|---|---|---|

| Interface B | Chinese, English | Online | Free, fast |

| Interface J | Chinese, English | Online | Free, fast |

| WhisperCpp | Chinese, Japanese, Korean, English, and 99 other languages. Good performance for foreign languages. | Local | (Actual use is unstable) Requires downloading transcription models. Chinese: Medium or larger model recommended. English, etc.: Smaller models can achieve good results. |

| fasterWhisper 👍 | Chinese, English, and 99 other languages. Excellent performance for foreign languages, more accurate timeline. | Local | (🌟Highly Recommended🌟) Requires downloading the program and transcription models. Supports CUDA, faster, accurate transcription. Super accurate timestamp subtitles. Prioritize using this. |

If you encounter the following situations when using the URL download function:

- The video website requires login information to download.

- Only lower resolution videos can be downloaded.

- Verification is required when network conditions are poor.

- Please refer to the Cookie Configuration Instructions to obtain cookie information and place the

cookies.txtfile in theAppDatadirectory of the software installation directory to download high-quality videos normally.

The simple processing flow of the program is as follows:

Speech Recognition -> Subtitle Segmentation (optional) -> Subtitle Optimization & Translation (optional) -> Subtitle & Video Synthesis

The main directory structure after installing the software is as follows:

VideoCaptioner/ ├── runtime/ # Runtime environment directory (do not modify) ├── resources/ # Software resource file directory (binaries, icons, etc., and downloaded faster-whisper program) ├── work-dir/ # Working directory, where processed videos and subtitle files are saved ├── AppData/ # Application data directory ├── cache/ # Cache directory, caching transcription and large model request data. ├── models/ # Stores Whisper model files ├── logs/ # Log directory, recording software running status ├── settings.json # Stores user settings └── cookies.txt # Cookie information for video platforms (required for downloading high-definition videos) └── VideoCaptioner.exe # Main program executable file

-

The quality of subtitle segmentation is crucial for the viewing experience. For this, I developed SubtitleSpliter, which can intelligently reorganize word-by-word subtitles into paragraphs that conform to natural language habits and perfectly synchronize with the video frames.

-

During processing, only the text content is sent to the large language model, without timeline information, which greatly reduces processing overhead.

-

In the translation stage, we adopt the "translate-reflect-translate" methodology proposed by Andrew Ng. This iterative optimization method not only ensures the accuracy of the translation.

The author is a junior college student. Both my personal abilities and the project have many shortcomings. The project is also constantly being improved. If you encounter any bugs during use, please feel free to submit Issues and Pull Requests to help improve the project.

2024.1.22

- Complete code architecture refactoring, optimizing overall performance

- Subtitle optimization and translation function modules are separated, providing more flexible processing options

- Added batch processing function: supports batch subtitles, batch transcription, batch subtitle video synthesis

- Comprehensive optimization of UI interface and interaction details

- Expanded LLM support: Added SiliconCloud, DeepSeek, Ollama, Gemini, ChatGLM, and other models

- Integrated multiple translation services: DeepLx, Bing, Google, LLM

- Added faster-whisper-large-v3-turbo model support

- Added multiple VAD (Voice Activity Detection) methods

- Support custom reflection translation switch

- Subtitle segmentation supports semantic/sentence modes

- Optimization of prompts for subtitle segmentation, optimization, and translation

- Optimization of subtitle and transcription caching mechanism

- Improved automatic line wrapping for Chinese subtitles

- Added vertical subtitle style

- Improved subtitle timeline switching mechanism to eliminate flickering issues

- Fixed the issue where Whisper API could not be used

- Added support for multiple subtitle video formats

- Fixed the problem of transcription errors in some cases

- Optimized video working directory structure

- Added log viewing function

- Added subtitle optimization for Thai, German, and other languages

- Fixed many bugs...

2024.12.07

- Added Faster-whisper support, better audio-to-subtitle quality

- Support for Vad voice breakpoint detection, greatly reducing hallucination phenomena

- Support for vocal separation, separating video background noise

- Support for turning off video synthesis

- Added maximum subtitle length setting

- Added setting to remove punctuation at the end of subtitles

- Optimization of prompts for optimization and translation

- Optimized LLM subtitle segmentation errors

- Fixed inconsistent audio conversion format issues

2024.11.23

- Added Whisper-v3 model support, greatly improving speech recognition accuracy

- Optimized subtitle segmentation algorithm, providing a more natural reading experience

- Fixed stability issues when detecting model availability

2024.11.20

- Support custom adjustment of subtitle position and style

- Added real-time log viewing for the subtitle optimization and translation process

- Fixed automatic translation issues when using the API

- Optimized video working directory structure, improving file management efficiency

2024.11.17

- Support flexible export of bilingual/monolingual subtitles

- Added alignment function for manuscript matching prompts

- Fixed stability issues when importing subtitles

- Fixed compatibility issues with non-Chinese paths for model downloads

2024.11.13

- Added Whisper API call support

- Support importing cookie.txt to download resources from major video platforms

- Subtitle file names are automatically consistent with video file names

- Added real-time viewing of running logs on the software homepage

- Unified and improved internal software functions

If you find this project helpful, please give it a Star. This will be the greatest encouragement and support for me!